ESG and GenAI: What investors need to know

The extraordinary impact of GenAI has sent investors scrambling to understand all the implications of this phenomenon. The Revaia team recently explored the opportunities being created by the ecosystem of tools and services emerging around GenAI.

Now we want to turn our attention to some of the risks. There are real, material issues associated with technologies powered by Large Language Models (LLMs), but so far there is little awareness of the full scope of these possible threats. The word “possible” matters here: the commercial versions of this technology are still young and evolving rapidly, and a great deal of research is still under way to understand their full impact and trade-offs.

As is often the case with the advent of revolutionary new platforms, there is a core tension between wanting to go full throttle on innovation so that society and the economy benefit from its advantages, and the need to think carefully about the fallout and unintended consequences. At Revaia, we believe that any new product or service should be a net benefit for the world. That matters for the long-term health of the planet and the people who live on it, but it also matters concretely for investors. If investors fail to fully account for the vulnerabilities inherent in GenAI-related assets, they could later be blindsided by developments that undermine the business.

Making such assessments at this stage of the GenAI funding frenzy isn’t easy, especially when competition for deals at extremely early stages is fierce and valuations are exploding. Let’s start with one hypothetical before we dive into the details.

There is much talk about the environmental impact of GenAI due to the massive computing power required to train LLMs (more on this in a moment). But for both regulators and investors to make a full analysis, we must also know what offsets occur: Does the product cause a reduction in other areas, such as people traveling for work? Are there other secondary changes (good or bad) that ripple out that nobody had foreseen?

Given that many of the companies seeking investment may not even have a fully developed product, the complete answers may be unknowable for now. Yet investors can’t just close their eyes. What’s needed is a framework for asking questions and identifying the aspects that must be watched carefully as a company scales. At the very least, investors should know that the founding team is aware of these issues, has values aligned with ensuring that technology is a force for good, and has a strategy for monitoring and adapting when necessary.

There is another advantage for investors who are willing to engage in a deep reflection here. As we have seen in the past with topics such as privacy and the environment, the problems that arise can also create opportunities for companies that develop products and services that mitigate them. For GenAI, tools that provide accountability, auditability, and transparency could be important for complying with regulations.

To explore this risk framework, let’s use ESG as a guide:

Environmental

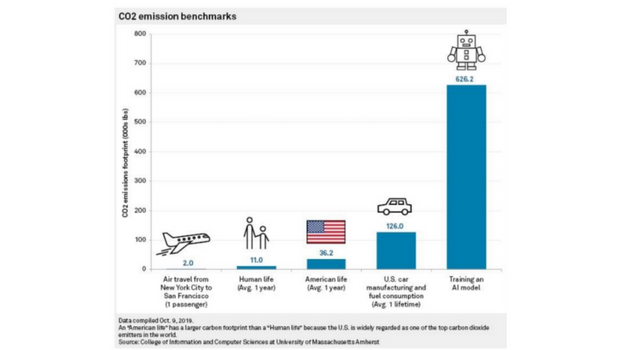

If we look at CO2 emissions, the initial signs are not good. A widely cited 2019 study by researchers at the University of Massachusetts Amherst estimated that training a single large AI model can emit roughly 284 metric tons of CO2, about five times the lifetime emissions of an average car, including its fuel. A later analysis by researchers at Google and UC Berkeley estimated that training OpenAI’s GPT-3 emitted around 550 tons of CO2.

While training is energy-intensive, over time the larger carbon impact is likely to come from the massive volume of usage, as models answer queries at scale.

This is bound to accelerate an already troubling trend. According to OpenAI researchers Dario Amodei and Danny Hernandez, the amount of computing power used in the largest AI training runs has been doubling every 3.4 months since 2012. That equates to a more than 300,000-fold increase between 2012 and 2018, far outpacing Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years.

This gives you a sense of the scope of the problem.
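For readers who want to check the arithmetic, here is a short back-of-the-envelope sketch in Python. It is our own illustration and assumes a perfectly constant doubling time, which real-world growth only approximates. It converts a 300,000-fold increase into a number of doublings and compares the result with a two-year doubling period over the same span.

```python
import math

def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative increase after `months`, assuming a constant doubling time."""
    return 2 ** (months / doubling_months)

# A 300,000-fold increase corresponds to log2(300,000) doublings (about 18.2).
doublings = math.log2(300_000)

# At one doubling every 3.4 months, that takes roughly 62 months (about 5 years).
months_needed = doublings * 3.4

# Over the same span, a Moore's-Law-style 24-month doubling gives only about a 6x increase.
moore_factor = growth_factor(months_needed, doubling_months=24)

print(f"{doublings:.1f} doublings over {months_needed:.0f} months")
print(f"Two-year doubling over the same period: {moore_factor:.0f}x")
```

The contrast between roughly 6x and 300,000x over about five years is what makes the trend so striking.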

Within this picture, there are important variations. Investors need to know how compute-intensive it is to train the LLM behind a product. If a single LLM sits at the core of a service, it may be resource-intensive to create, but its footprint per query shrinks over time as the training cost is spread across more queries. If, on the other hand, a new LLM has to be trained for each customer, vertical, or application, the environmental impact could be much higher over time. Carbon emissions also vary widely between LLMs that generate text and those that generate images.
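To make the trade-off concrete, here is a minimal Python sketch that spreads training emissions across the queries a service handles. Everything in it is a hypothetical assumption chosen for illustration: the `Deployment` class, 300 tons of CO2 per training run, 2 grams of CO2 per query served, and 20 per-customer models are not measurements of any real product.

```python
from dataclasses import dataclass

@dataclass
class Deployment:
    """Hypothetical emissions model: every input here is an illustrative assumption."""
    training_tons: float     # CO2 to train one model, in metric tons
    models_trained: int      # 1 for a single shared model, N for one model per customer
    grams_per_query: float   # CO2 emitted to serve one query, in grams

    def total_tons(self, queries: int) -> float:
        training = self.training_tons * self.models_trained
        serving = self.grams_per_query * queries / 1_000_000  # grams -> metric tons
        return training + serving

    def grams_per_query_all_in(self, queries: int) -> float:
        """Training plus serving emissions, amortized over every query served."""
        return self.total_tons(queries) * 1_000_000 / queries

shared = Deployment(training_tons=300, models_trained=1, grams_per_query=2)
per_customer = Deployment(training_tons=300, models_trained=20, grams_per_query=2)

for queries in (1_000_000, 100_000_000):
    print(queries,
          round(shared.grams_per_query_all_in(queries), 1),
          round(per_customer.grams_per_query_all_in(queries), 1))
```

Under these invented numbers, the shared model’s all-in footprint falls from about 302 grams per query at one million queries to 5 grams at 100 million, while the per-customer approach stays more than ten times higher. The figures themselves mean little; the structure of the calculation is what investors can ask founders to fill in with real data.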

Given the climate risk, regulation and public scrutiny around emissions-intensive models could hinder the sector’s growth. In addition, considering the high energy consumption, volatility in the price of power could hurt the bottom line.

Also, to truly assess the risks here, companies may need to look beyond their own operations. Increased regulation to decarbonize supply chains could slow the growth of the IT sector and of data-intensive software models. Along those supply chains, the depletion of raw materials such as the rare metals needed to produce chips, or the land required for data centers, could also constrain the AI sector.

In the short term, companies are finding ways to optimize the demands their applications place on these models to ease the cost and environmental impact. That said, use of such LLMs is growing exponentially, and there is no overarching solution to rising emissions.

For now, investors should ask their portfolio companies whether they perform an annual carbon footprint assessment and what strategies they have to reduce it. Those companies, in turn, can exert some pressure on suppliers and partners, but their leverage may be limited: because computing power and chips are concentrated among a few large cloud providers and semiconductor companies, it will be hard to force them to change.
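As a starting point for such an assessment, operational emissions from compute are commonly estimated as the energy used multiplied by the carbon intensity of the electricity that supplied it. The Python sketch here follows that approach under simplifying assumptions we chose for illustration: the hypothetical function name, the GPU-hour and power figures, a data center overhead factor (power usage effectiveness, or PUE) of 1.2, and both grid intensities are placeholders. It also ignores the embodied emissions of manufacturing chips and building data centers, which a full supply-chain assessment would need to add.

```python
def compute_emissions_tons(gpu_hours: float, avg_power_kw: float,
                           pue: float, grid_kg_per_kwh: float) -> float:
    """Rough operational CO2 from a compute workload, in metric tons.

    Ignores embodied emissions from hardware manufacturing and construction.
    """
    it_energy_kwh = gpu_hours * avg_power_kw   # energy drawn by the accelerators
    facility_energy_kwh = it_energy_kwh * pue  # add cooling and other data center overhead
    return facility_energy_kwh * grid_kg_per_kwh / 1000  # kg -> metric tons

# Illustrative placeholder inputs, not real measurements.
fossil_heavy = compute_emissions_tons(100_000, 0.4, 1.2, grid_kg_per_kwh=0.5)
low_carbon = compute_emissions_tons(100_000, 0.4, 1.2, grid_kg_per_kwh=0.05)
print(f"{fossil_heavy:.1f} t vs {low_carbon:.1f} t")
```

In this example, the same workload produces ten times less CO2 when only the grid’s carbon intensity changes, which is why the share of renewable energy powering data centers is such an important question to put to suppliers.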

Best practices:

- develop energy-efficient algorithms

- optimize hardware for AI workloads

- increase the use of renewable energy sources in data centers

- explore techniques like model compression and quantization to reduce computational requirements
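To illustrate the last item: quantization stores model weights at lower precision, for example as 8-bit integers instead of 32-bit floating-point numbers, which cuts the memory needed for weights roughly fourfold and can reduce the energy needed to serve a model. The pure-Python sketch here shows symmetric per-tensor int8 quantization on a handful of made-up weights. It is a simplified illustration only; production frameworks use more sophisticated schemes such as per-channel scales, calibration data, and specialized hardware kernels.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: each weight w is approximated as scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest weight to +/-127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floating-point weights from the int8 values."""
    return [scale * v for v in q]

# Made-up weights for illustration.
weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is at most half a quantization step (scale / 2).
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max_error={max_error:.4f}")
```

Each weight now needs 1 byte instead of 4, plus a single shared scale factor, at the cost of a small, bounded rounding error.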

Social

Several aspects of GenAI need to be considered here.

The first is data, both its sourcing and its delivery. It’s reasonable to ask whether the data used to train the models is reliable. If models are trained on biased data, they are likely to produce biased output, which in turn could exacerbate discrimination.

Next, there are issues of data privacy and copyright infringement. Currently, there are few clear safeguards governing how copyrighted content is used to train models. However, creators of original content are putting pressure on GenAI companies over the use of their work. In recent months, for instance, The New York Times sued OpenAI and Microsoft over the use of its content to train models, but the newspaper is also negotiating a possible licensing deal.

In addition, the output these systems deliver can pose serious security threats if it exposes sensitive information.

Running parallel to these technical issues are the human ones. There is concern about the social impact if GenAI eliminates low-wage jobs. GenAI companies must also address diversity and inclusion. To spot the data issues we just highlighted, a diverse team is key to asking questions about possible biases in the data or algorithms, including cultural biases that could result from training mostly on English-language data rather than local languages.

This will remain a challenge: the IT sector has poor gender parity, and the gap only widens in advanced fields such as AI/ML. The industry will need a strategy for developing the talent these jobs require, or it will face labor shortages.

Fortunately, we’ve seen some companies embrace the social challenge. One GenAI startup we spoke with recently has hired a Chief Impact Officer, whose role is to focus on diversity and to question the product development team about the design of the product and its algorithms so that diversity is built in. This is no easy task, as Google’s Gemini demonstrated recently: its attempts to address bias touched off a firestorm when the tool appeared to produce racially inaccurate images of historical figures.

Best practices:

- identify vulnerabilities, and, where appropriate, incidents and patterns of misuse

- build diverse teams to help identify and reduce bias in training data

- implement ethics oversight aimed at fostering responsible AI development and deployment practices

- develop robust privacy and security measures, along with transparency and accountability processes

Governance and Regulation

The EU’s Corporate Sustainability Reporting Directive (CSRD) went into effect at the start of this year and requires detailed ESG reporting. GenAI will inevitably be a part of that, and we’ll watch closely to see how much transparency these rules create around this technology.

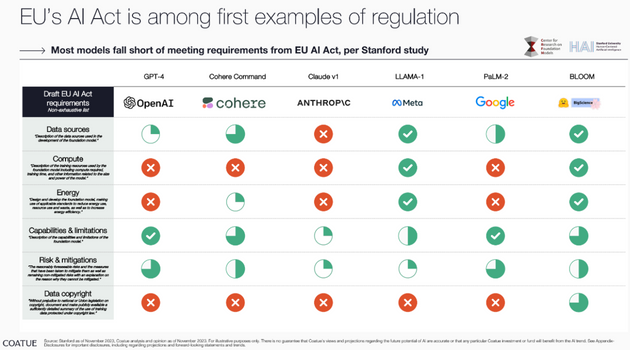

Meanwhile, the European Parliament recently voted to approve the EU AI Act. This landmark legislation establishes obligations for providers and users depending on the level of risk posed by an artificial intelligence system. AI systems considered a threat to people will be banned, with a particular emphasis on protecting children from manipulative content. Certain types of GenAI systems will be subject to tough disclosure and review requirements.

Put those two pieces of legislation together, and one can see that Europe is taking the lead on regulating these issues. It’s a signal that there is more awareness regarding the ESG elements of GenAI, and a willingness to consider the risks.

While both regulatory acts are complex, a fundamental aspect of each is a requirement for transparency and respect for human rights. In terms of governance, this means that companies must have internal controls and tools in place to track the required information, as well as a system for reporting it. Those efforts should be complemented by a process that analyzes the data for insights that help reduce carbon impact and improve any areas where diversity is lacking.

Even though regulation gets a lot of attention, there are also many voluntary initiatives related to GenAI’s ESG challenges. The Data for Good association has developed a guide for organizations wanting to explore the implications of GenAI and possible solutions to ESG issues. In addition, the United Nations has convened a High-level Advisory Body on Artificial Intelligence, G7 nations have agreed to a voluntary AI code of conduct, and a group of top Silicon Valley VCs signed a commitment to build AI responsibly.

Best practices:

- adopt AI for Good principles

- ensure that AI and ML technologies are used for sustainable practices

In highlighting these risks, we want to make clear that we remain intensely optimistic about GenAI. It may well turn out to be one of the most disruptive technologies of this generation, and its benefits across a wide range of industries are only now coming into focus. But it’s also all too easy to get caught up in the hype and the hunt for quick returns and lose sight of the bigger picture.

If the risks are ignored until the moment when their harm becomes so large that it provokes a backlash, it might be too late to make course corrections that would have been less painful earlier in the journey. Being vigilant and making the appropriate tradeoffs now is the right thing to do for the planet. It’s also the savvy thing to do for the smartest investors.